Int J Drug Res Clin. 2:e8.

doi: 10.34172/ijdrc.2024.e8

Perspective

Publication Bias and Systematic Error: How to Review Health Sciences Evidence

M. Reza Sadaie 1, *

Author information:

1NovoMed Consulting, Biomedical Sciences, Germantown, MD 20874, USA

Copyright and License Information

© 2024 The Author(s).

This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Please cite this article as follows: Sadaie MR. Publication bias and systematic error: how to review health sciences evidence. Int J Drug Res Clin. 2024; 2: e8. doi: 10.34172/ijdrc.2024.e8

The accumulating body of health sciences evidence raises questions about how to de-bias potential errors in datasets and systematic reviews, so as to ensure the safety and effectiveness of medications and therapeutic interventions. Publication bias and systematic error can create context-specific inequities and safety concerns and distort risk predictions. It is therefore of paramount importance to de-bias data interpretation and reduce the burden of error in scientific evidence.

In a recent perspective, Pierson rightly asserts that healthcare providers should be held accountable for providing reassurance about the risks associated with therapeutics or procedures.1 One sensible approach is to examine the likely causes of prevalent systematic errors, particularly so-called publication bias, in the health sciences literature. These challenging problems also apply to systematic reviews and meta-analyses.

An overview of how to read medical evidence was previously published,2 referring to a well-regarded textbook that provides guidelines, procedures, and detailed explanations of, among other topics, bias and dataset limitations.3 Bias is defined as a condition that produces results that depart from the true values in a consistent direction (i.e., systematic error); unbiased refers to the absence of systematic error.3

Chance, by contrast, produces random error, which may either favor or oppose the study hypothesis in an unpredictable direction. Both bias and chance can produce differences between the outcomes of the study and control groups.3 A reasonable way to de-bias findings and reduce systematic error is to take preventive measures that address the pitfalls in data collection, data processing, and publication. Accordingly, this perspective aims to highlight and discuss basic, established procedures for addressing these concerns.
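The distinction between bias and chance can be illustrated with a small simulation. The Python sketch below assumes a hypothetical true value of 120 (e.g., a systolic blood pressure reading in mmHg), a fixed offset standing in for systematic error, and zero-mean Gaussian noise standing in for random error; the function name and all numbers are illustrative and are not taken from the cited sources. As the number of measurements grows, the random error in the mean shrinks toward zero, whereas the systematic error does not.

```python
import random
import statistics

TRUE_VALUE = 120.0  # hypothetical true value (e.g., systolic blood pressure, mmHg)


def mean_error(n, bias, noise_sd, seed=0):
    """Return the deviation of the mean of n simulated measurements from TRUE_VALUE.

    Each measurement = TRUE_VALUE + bias (systematic error, same direction
    every time) + Gaussian noise with mean 0 (random error, i.e., chance).
    """
    rng = random.Random(seed)
    values = [TRUE_VALUE + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
    return statistics.mean(values) - TRUE_VALUE


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        print(f"n={n:>7}: unbiased {mean_error(n, 0.0, 10.0):+.2f}, "
              f"biased {mean_error(n, 5.0, 10.0):+.2f}")
```

Increasing the sample size is therefore a remedy for chance but not for bias, which has to be prevented through study design and data handling.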

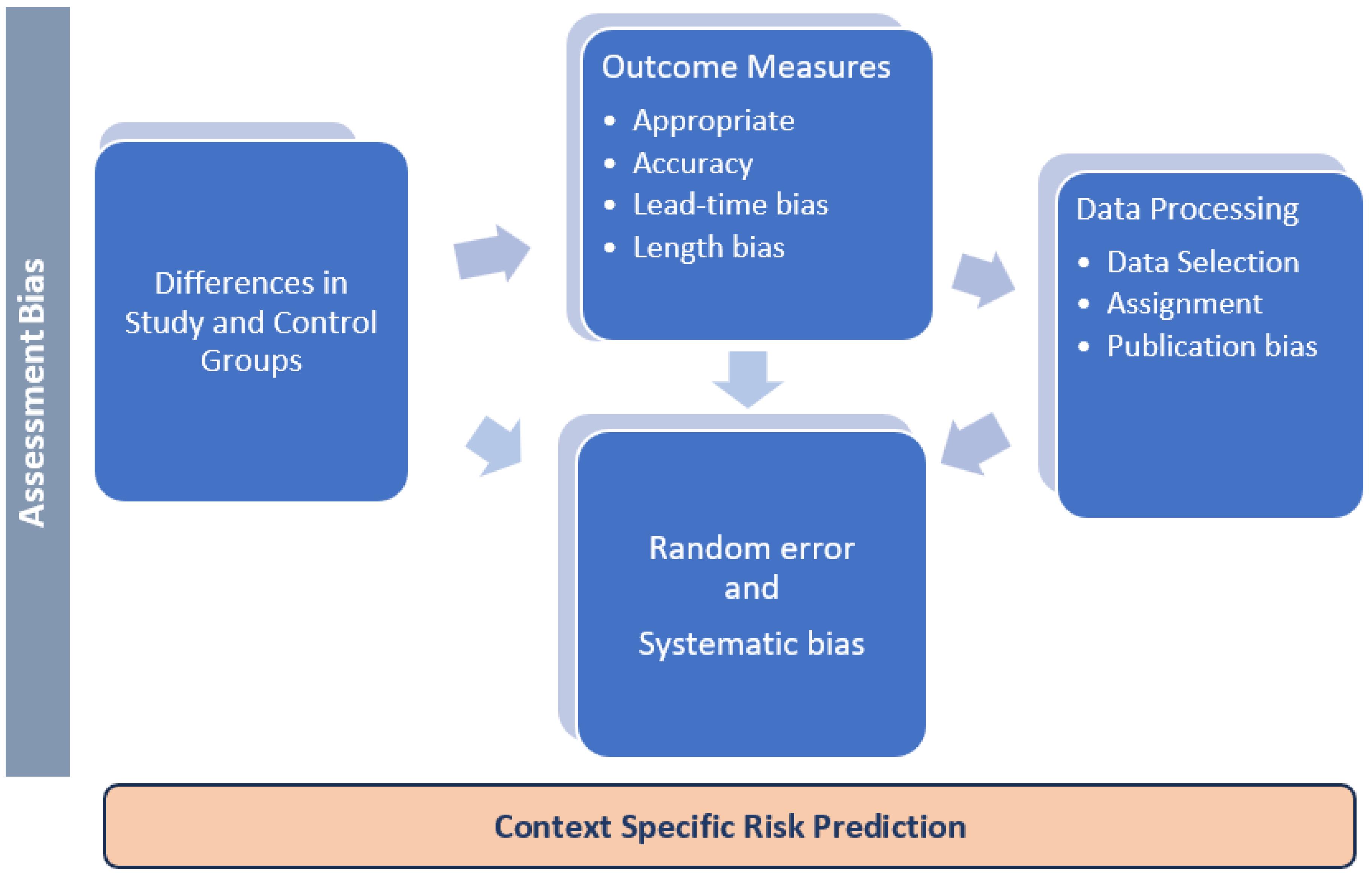

To de-bias the outcome, the approach should begin by stating a predefined hypothesis and a valid scientific rationale before evaluating evidence-based outcome measures.3 The most common biases that affect systematic reviews may be largely rooted in the processing of biomedical datasets. They may arise from a single cause or from multiple random and systematic errors (e.g., in data collection, data analysis, and data extrapolation and interpretation) that depend on assumptions and variables such as differences in the target population and extrapolation to a risk group, a community, or a wider population. In addition, inter-observer variation (and intra-/inter-assay variation), as well as inherent biases such as lead-time bias (earlier detection lengthening the apparent interval from diagnosis to outcome) and length-time bias (preferential detection of slowly progressive rather than rapidly progressive disease), can all affect the outcomes. Furthermore, these variables and the processes that generate them may confound context-specific risk predictions, as illustrated in Figure 1.

Figure 1. The Main Sources and Types of Data Processing and Publication Bias

Taken together, insufficient evaluation of study group characteristics or of special populations can produce unreliable outcomes. For instance, the strategy used to assess safety and efficacy in hospitalized COVID-19 patients may itself affect the apparent therapy outcome.4 Likewise, individuals with coronary artery disease and/or diabetes presumably carry underlying risk factors that can affect outcomes. In addition, when distinguishing the magnitude of an association, confounding variables and effect size can influence the result, and arguably no single contributory, necessary, or sufficient cause can be regarded as the sole root cause of a given outcome.5 These factors are important for evaluating causality, assessing risk, and making context-specific risk predictions.
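One standard way to expose confounding is stratified analysis. The Python sketch below, offered only as an illustration, uses hypothetical 2 × 2 counts in which diabetes (the stratifying variable) is associated with both the exposure and the outcome. Within each stratum the odds ratio is 1, yet collapsing the strata yields a crude odds ratio near 1.9; the Mantel-Haenszel estimator, a common adjustment method that the cited sources do not specifically prescribe, recovers the stratum-level value.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """2 x 2 table for one level of a hypothetical confounder."""
    a: int  # exposed, outcome present
    b: int  # exposed, outcome absent
    c: int  # unexposed, outcome present
    d: int  # unexposed, outcome absent


def odds_ratio(t):
    return (t.a * t.d) / (t.b * t.c)


def crude_odds_ratio(strata):
    """Collapse all strata into a single table, ignoring the confounder."""
    pooled = Stratum(sum(s.a for s in strata), sum(s.b for s in strata),
                     sum(s.c for s in strata), sum(s.d for s in strata))
    return odds_ratio(pooled)


def mantel_haenszel_or(strata):
    """Mantel-Haenszel odds ratio adjusted for the stratifying variable."""
    num = sum(s.a * s.d / (s.a + s.b + s.c + s.d) for s in strata)
    den = sum(s.b * s.c / (s.a + s.b + s.c + s.d) for s in strata)
    return num / den


# Hypothetical counts stratified by diabetes status.
strata = [Stratum(a=40, b=40, c=10, d=10),   # with diabetes
          Stratum(a=5, b=15, c=20, d=60)]    # without diabetes

print(f"crude OR = {crude_odds_ratio(strata):.2f}")    # crude OR = 1.91
print(f"MH OR    = {mantel_haenszel_or(strata):.2f}")  # MH OR    = 1.00
```

Because the exposure-outcome association disappears after stratification, the crude estimate in this example reflects confounding by diabetes rather than an effect of the exposure.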

Unresolved publication bias can ultimately generate and perpetuate uncertainty, particularly when data are purposefully excluded and therefore missing from the analysis. Meta-analysts usually justify such exclusions by treating the data as outliers and omit them from the calculations and final assessment. This can be acceptable when the investigators show that including the excluded data makes no difference to the interpretation of the outcomes.
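A sensitivity analysis makes this condition checkable: the pooled estimate is computed with and without the excluded data, and the two results are compared. The Python sketch below assumes a simple fixed-effect, inverse-variance model on the log odds ratio scale and uses hypothetical study results; the function names are illustrative. A random-effects model would often be preferred when heterogeneity is expected, and neither choice is prescribed by the cited sources.

```python
import math

Z95 = 1.959964  # two-sided 95% standard normal quantile


def pooled_or(studies):
    """Fixed-effect inverse-variance pooling of (log_or, se) pairs.

    Returns the pooled odds ratio and its 95% confidence interval.
    """
    weights = [1.0 / se ** 2 for _, se in studies]
    log_or = sum(w * y for w, (y, _) in zip(weights, studies)) / sum(weights)
    se = math.sqrt(1.0 / sum(weights))
    return (math.exp(log_or),
            math.exp(log_or - Z95 * se),
            math.exp(log_or + Z95 * se))


def sensitivity_analysis(studies, excluded):
    """Pool with and without the studies whose indices are in `excluded`."""
    kept = [s for i, s in enumerate(studies) if i not in excluded]
    return pooled_or(studies), pooled_or(kept)


# Hypothetical (log odds ratio, standard error) pairs; study 3 is the "outlier".
studies = [(-0.50, 0.20), (-0.30, 0.25), (-0.40, 0.30), (0.60, 0.50)]
with_all, without_outlier = sensitivity_analysis(studies, excluded={3})
for label, (or_, lo, hi) in (("all studies", with_all),
                            ("outlier excluded", without_outlier)):
    print(f"{label:>17}: OR = {or_:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

If the two estimates and their intervals lead to the same conclusion, the exclusion is unlikely to have changed the interpretation; if they diverge, both results arguably belong in the report.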

Numerous standards exist for conducting systematic reviews and consistent data analyses and for preparing and publishing unbiased reports. To reduce bias, it is beneficial first to create one or more checklists, based on consensus and standard guidelines, covering reading, assignment, data collection/extraction, analysis, and data extrapolation and interpretation.
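Such a checklist can also be kept in a structured, machine-readable form so that unanswered or failed items are flagged before the analysis begins. The Python sketch below is one hypothetical way to do this; the class and method names (ReviewChecklist, record, open_issues) are illustrative inventions, and the questions are adapted from Boxes 1 and 4.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ChecklistItem:
    stage: str                     # e.g., "study design", "data extraction"
    question: str
    answer: Optional[bool] = None  # None means "not yet assessed"
    note: str = ""                 # reviewer's justification


@dataclass
class ReviewChecklist:
    items: List[ChecklistItem] = field(default_factory=list)

    def add(self, stage: str, question: str) -> None:
        self.items.append(ChecklistItem(stage, question))

    def record(self, question: str, answer: bool, note: str = "") -> None:
        """Record a reviewer's answer to a previously added question."""
        for item in self.items:
            if item.question == question:
                item.answer, item.note = answer, note
                return
        raise KeyError(f"unknown checklist question: {question!r}")

    def open_issues(self) -> Dict[str, List[str]]:
        """Group questions answered 'no' or not yet assessed by stage."""
        issues: Dict[str, List[str]] = {}
        for item in self.items:
            if item.answer is not True:
                issues.setdefault(item.stage, []).append(item.question)
        return issues


checklist = ReviewChecklist()
checklist.add("study design", "Is the hypothesis predefined?")
checklist.add("study design", "Is the outcome assessment process predetermined?")
checklist.add("publication bias", "Did an extensive search obtain all relevant studies?")
checklist.record("Is the hypothesis predefined?", True, "hypothetical: registered protocol")
print(checklist.open_issues())
```

Keeping the reviewer's note next to each answer gives a record of the justification that can later be reported alongside the study limitations.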

For instance, in a cross-over study, if feasible and carefully conducted, the same individuals serve as their own controls (e.g., on and off a medication or intervention) under defined conditions, which may be advantageous compared with pairing different individuals in a cohort study. Considering these issues can be an effective way to avoid selection bias; however, it may not be possible to reassess the true value of a medication in groups that have been overmatched, or doing so may require further clinical investigation.
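The within-subject advantage of a cross-over design can be illustrated numerically. The Python sketch below simulates hypothetical off/on measurements for 40 subjects, assuming a between-subject standard deviation of 15, a within-subject standard deviation of 3, a true treatment effect of -5, and no carry-over or period effects (i.e., an adequate washout); these values and the function names are assumptions for illustration only. Analyzing within-subject differences gives a much smaller standard error than treating the same values as two independent groups, because each subject's baseline cancels out.

```python
import math
import random
import statistics


def simulate_crossover(n, effect, between_sd, within_sd, seed=0):
    """Simulate hypothetical off/on measurements for n subjects acting as their own controls.

    Each subject has a personal baseline (between-subject variation); every
    measurement adds independent within-subject noise. Carry-over and period
    effects are assumed to be absent.
    """
    rng = random.Random(seed)
    off, on = [], []
    for _ in range(n):
        baseline = rng.gauss(100.0, between_sd)
        off.append(baseline + rng.gauss(0.0, within_sd))
        on.append(baseline + effect + rng.gauss(0.0, within_sd))
    return off, on


def paired_se(off, on):
    """Standard error of the mean within-subject (on - off) difference."""
    diffs = [b - a for a, b in zip(off, on)]
    return statistics.stdev(diffs) / math.sqrt(len(diffs))


def unpaired_se(off, on):
    """Standard error if the same values were analyzed as two independent groups."""
    return math.sqrt(statistics.variance(off) / len(off)
                     + statistics.variance(on) / len(on))


off, on = simulate_crossover(n=40, effect=-5.0, between_sd=15.0, within_sd=3.0)
diffs = [b - a for a, b in zip(off, on)]
print(f"mean on-off difference = {statistics.mean(diffs):.2f}")
print(f"paired SE = {paired_se(off, on):.2f}, unpaired SE = {unpaired_se(off, on):.2f}")
```

The gain depends on how large between-subject variation is relative to within-subject variation, and it is lost if carry-over effects are not controlled.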

While it is important to state the study hypothesis and the scientific rationale/criteria for data selection, it is equally important to provide a complete explanation/justification for data extrapolation and for the limitations of the research.

I suggest creating specific checklists, similar to those in Boxes 1-4 or based on the methods described previously,3 consisting of a set or series of questions. This will partly reduce the common pitfalls of publication bias, particularly when a large number of variables are involved or the outcomes are suboptimal or invalid. This approach may, however, be inadequate on its own for a disease with high prevalence. In that case, odds ratios no longer approximate the relative risk; they lie farther from 1 than the relative risk does and can disproportionately exaggerate the apparent safety and effectiveness of medications, biologics, and/or devices.
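The arithmetic behind this caution is straightforward. The odds ratio approximates the relative risk only when the outcome is rare; when the outcome is common, the odds ratio moves farther from 1 than the relative risk. The Python sketch below compares the two measures for two hypothetical trials, each with 1,000 patients per arm and the same halving of risk; the counts are illustrative only.

```python
def risk_ratio(events_t, n_t, events_c, n_c):
    """Ratio of outcome risks: treated group vs. control group."""
    return (events_t / n_t) / (events_c / n_c)


def odds_ratio(events_t, n_t, events_c, n_c):
    """Ratio of outcome odds: treated group vs. control group."""
    odds_t = events_t / (n_t - events_t)
    odds_c = events_c / (n_c - events_c)
    return odds_t / odds_c


# Hypothetical trials, 1,000 patients per arm, same 50% relative risk reduction.
scenarios = {
    "rare outcome (1% vs 2%)": (10, 1000, 20, 1000),
    "common outcome (30% vs 60%)": (300, 1000, 600, 1000),
}
for label, counts in scenarios.items():
    print(f"{label}: RR = {risk_ratio(*counts):.2f}, OR = {odds_ratio(*counts):.2f}")
# rare outcome (1% vs 2%): RR = 0.50, OR = 0.49
# common outcome (30% vs 60%): RR = 0.50, OR = 0.29
```

In the common-outcome scenario the odds ratio suggests roughly a 71% reduction, whereas the risk was actually halved.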

Box 1. Study Design and Selection Bias

Study Design

- □ Is the hypothesis predefined?
- □ Is the process for outcome assessment predetermined?

Selection Bias

- □ Are the study designs, outcome measures, and characteristics free from inherent bias (e.g., constraints in the inclusion/exclusion criteria)?
- □ Is the prior matching sufficiently discussed (concerning age, gender, race, lifestyle, underlying risk factors such as diabetes, vital-organ risk factors, and exposure to other hazards, pathogens, and/or medications)?
- □ Do the statistical tests reflect clinical importance (relative to the outliers and variables)?

Box 2. Assessment Bias

Assessment Bias

Appropriate: Are the study outcome(s) relevant to the study question?

- □ Were valid outcome measures implemented?
- □ Were all individuals’ outcomes assessed?
- □ Are outcomes unaffected by the process of observation?
- □ Were the correct timelines and any carry-over effect of treatment considered?
- □ Are there any other potential biases during the implementation of the measurements?

Accurate: Free of systematic error

Recall Bias

- □ Does the information come from the memory of study participants?
- □ Or measurements by the study investigator(s)?
- □ Or both (random error)?

Reporting Bias

- □ Can information arise from obligation/willingness vs. hesitation to report?
- □ Or is the sought information personal or sensitive?

Precise: Minimal/acceptable variation

- □ Variation in the direction of data collection.
- □ Inaccurate measurement by the testing instruments.
- □ Inconsistent data processing or both (random error).

Implementation Error

- □ An incomplete follow-up can distort the conclusions.
- □ Individuals excluded from the final assessment had a different frequency of the outcome.
- □ Unequal intensity of observation of the two study groups.
- □ Inaccurate measurement by the testing instruments.

Box 3. Study Quality

How do differences in study quality justify excluding low-quality studies, while retaining high-quality studies, in the meta-analysis?

- □ Are the quality scores obtained by at least two researchers (masked to the identities of the authors)?
- □ Did the study use only older patients, severely ill patients, or patients with other specific characteristics or prognostic factors?
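When two researchers score study quality independently, their agreement beyond chance is commonly summarized with Cohen's kappa. The Python sketch below is a minimal implementation for categorical ratings; the two-level "high"/"low" scale and the ratings themselves are hypothetical.

```python
import math
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: chance-corrected agreement between two raters."""
    if not rater_a or len(rater_a) != len(rater_b):
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance from each rater's marginal frequencies.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / n ** 2
    if expected == 1.0:
        return math.nan  # kappa is undefined when chance agreement is certain
    return (observed - expected) / (1.0 - expected)


# Hypothetical quality ratings of the same 10 studies by two masked reviewers.
reviewer_1 = ["high", "high", "high", "low", "low", "high", "low", "high", "high", "low"]
reviewer_2 = ["high", "high", "low", "low", "low", "high", "low", "high", "high", "high"]
print(f"kappa = {cohens_kappa(reviewer_1, reviewer_2):.2f}")  # kappa = 0.58
```

Here the reviewers agree on 8 of 10 studies, but because 52% agreement would be expected by chance alone, kappa is only moderate; disagreements are often resolved by discussion or by a third reviewer before quality scores are used to include or exclude studies.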

Box 4. Publication Bias

- □ Occurs when only large randomized controlled studies are selected for a meta-analysis.
- □ Small investigations and negative or null (no-difference) results are frequently unreported.
- □ Wide variations occur in sample sizes vs. outcome measures (e.g., the odds ratio, which compares the odds of the outcome in the study group with the odds in the control group).
- □ Confirm that an extensive search retrieved all relevant studies.
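Small-study effects of the kind listed in Box 4 are often screened for with a funnel plot and Egger's regression test, which regresses each study's standardized effect (effect divided by its standard error) on its precision (the reciprocal of the standard error); an intercept far from zero suggests asymmetry. The Python sketch below implements the ordinary least-squares version with hypothetical log odds ratios. It reports only the t statistic; a two-sided p-value could be obtained from the t distribution with k - 2 degrees of freedom (for example, 2 * scipy.stats.t.sf(abs(t), k - 2)). The test has low power with few studies and is usually recommended only when about ten or more studies are available, and asymmetry can have causes other than publication bias.

```python
import math


def egger_test(studies):
    """Egger's regression test for funnel-plot asymmetry.

    studies: list of (effect, standard_error) pairs, e.g., log odds ratios.
    Regresses the standardized effect (effect / SE) on precision (1 / SE) by
    ordinary least squares. Returns (intercept, slope, se_intercept, t).
    """
    k = len(studies)
    if k < 3:
        raise ValueError("at least 3 studies are needed")
    x = [1.0 / se for _, se in studies]      # precision
    y = [eff / se for eff, se in studies]    # standardized effect
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s2 = residual_ss / (k - 2)               # residual variance on k - 2 df
    se_intercept = math.sqrt(s2 * (1.0 / k + x_bar ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else math.nan
    return intercept, slope, se_intercept, t_stat


# Hypothetical log odds ratios in which smaller studies (larger SE) report
# larger benefits, the pattern expected when small null studies go unpublished.
studies = [(-0.10, 0.08), (-0.15, 0.10), (-0.20, 0.15),
           (-0.45, 0.25), (-0.60, 0.30), (-0.80, 0.40)]
b0, b1, se_b0, t = egger_test(studies)
print(f"intercept = {b0:.2f} (SE {se_b0:.2f}), t = {t:.2f} on {len(studies) - 2} df")
```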

Conclusion

Predefined criteria for data review and assessment can reduce the likelihood of bias and obviate the need to include every confounding variable in the context, outcome measures, study endpoints, and/or dataset interpretations. Although retaining all relevant studies in systematic reviews and meta-analyses provides a complete picture of the data evaluation, excluding outliers is unlikely to de-bias evaluations unless the characteristics of the differences in the variables are determined by verifiable quantitative approximations.

Ethics statement

Not applicable.

Conflict of interests declaration

None.

Disclaimer

The statements in this perspective represent the author’s view and understanding from the cited references and are intended solely for the advancement of the data review and assessments. The author has no conflict of interests.

References

- Pierson E. Accuracy and equity in clinical risk prediction. N Engl J Med 2024; 390(2):100-2. doi: 10.1056/NEJMp2311050

- Delichatsios HK. Studying a study and testing a test: how to read the medical evidence. Ann Intern Med 2000; 133(9):760. doi: 10.7326/0003-4819-133-9-200011070-00040

- Riegelman RK, Nelson BA. Studying a Study and Testing a Test. 7th ed. USA: LWW; 2020.

- Rezabakhsh A, Mojtahedi F, Tahsini Tekantapeh S, Mahmoodpoor A, Ala A, Soleimanpour H. Therapeutic impact of tocilizumab in the setting of severe COVID-19; an updated and comprehensive review on current evidence. Arch Acad Emerg Med 2024; 12(1):e47.

- Rezabakhsh A, Manjili MH, Hosseinifard H, Sadaie MR. Causes of autoimmune psoriasis and associated cardiovascular disease: roles of human endogenous retroviruses and antihypertensive drugs—a systematic review and meta-analysis. medRxiv [Preprint]. November 24, 2023. Available from: https://www.medrxiv.org/content/10.1101/2023.11.24.23298981v1.full.